Queryloop RAG series, Part 1: Introduction to Retrieval

Visualizing retrieval: A text example

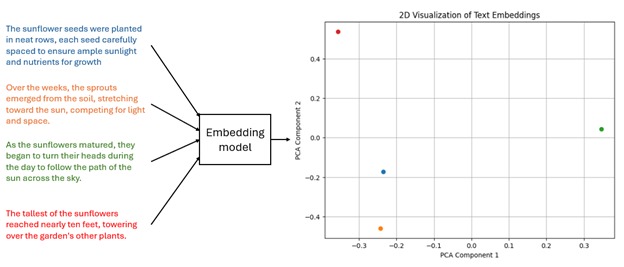

Assume the following paragraph has been chunked sentence by sentence, vectorized, and stored in a vector database.

“The sunflower seeds were planted in neat rows, each seed carefully spaced to ensure ample sunlight and nutrients for growth. Over the weeks, the sprouts emerged from the soil, stretching toward the sun, competing for light and space. As the sunflowers matured, they began to turn their heads during the day to follow the path of the sun across the sky. The tallest of the sunflowers reached nearly ten feet, towering over the garden's other plants.”

To visualize the embeddings in 2-D, we will use principal component analysis (PCA) to reduce their dimensionality while preserving as much of the variance in the data as possible.
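The reduction step can be sketched as follows. This is a minimal sketch, assuming NumPy is available; `pca_2d` is a hypothetical helper name, and the random vectors are stand-ins for real 1536-dimensional embeddings, not output from any embedding model.

```python
import numpy as np


def pca_2d(embeddings):
    """Project row vectors onto their first two principal components."""
    X = np.asarray(embeddings, dtype=float)
    # PCA operates on mean-centered data.
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    projected = X_centered @ Vt[:2].T
    # Fraction of the total variance kept by the two components.
    variance_ratio = (S[:2] ** 2) / np.sum(S ** 2)
    return projected, variance_ratio


# Stand-in data: 5 random vectors instead of real sentence embeddings.
rng = np.random.default_rng(0)
stand_in_embeddings = rng.normal(size=(5, 1536))
points_2d, variance_ratio = pca_2d(stand_in_embeddings)
print(points_2d.shape)  # (5, 2)
print(variance_ratio.sum())  # share of variance that survives the projection
```

The variance ratio is worth checking before trusting a 2-D plot: when it is low, points that look close in the figure may be far apart in the original space.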

OpenAI text-embedding-3-small:

We will first experiment with the OpenAI ‘text-embedding-3-small’ embedding model, which has a dimensionality of 1536. The following figure shows the vector embeddings of the sentences above, reduced to 2-D.

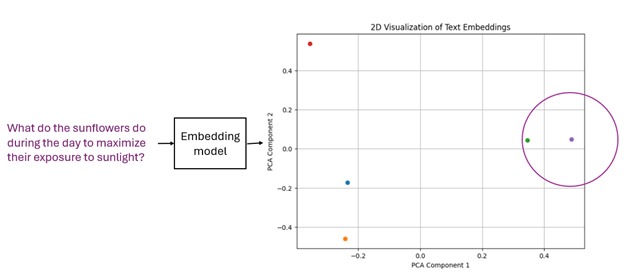

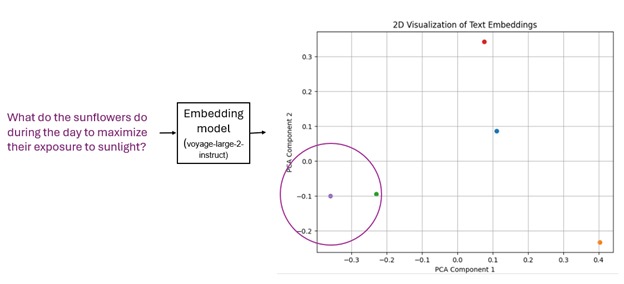

Let us ask the question ‘What do the sunflowers do during the day to maximize their exposure to sunlight?’. The question is vectorized in the same way, and its distance to each of the stored vector embeddings is calculated. If we choose to retrieve only the single closest vector, the sentence ‘As the sunflowers matured, they began to turn their heads during the day to follow the path of the sun across the sky’ will be retrieved.

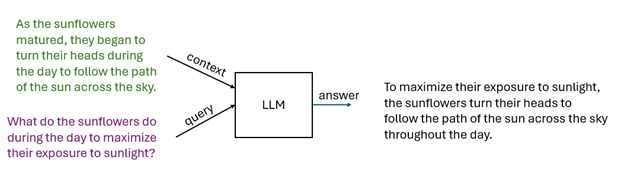

This retrieved context can then be passed to an LLM along with the query for retrieval-augmented generation.

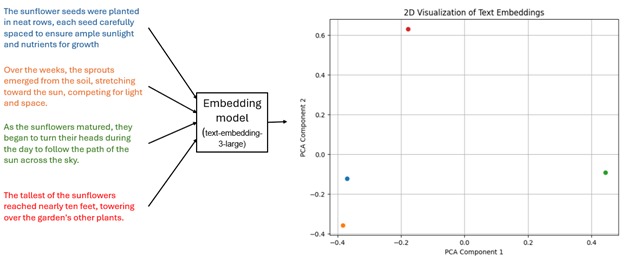

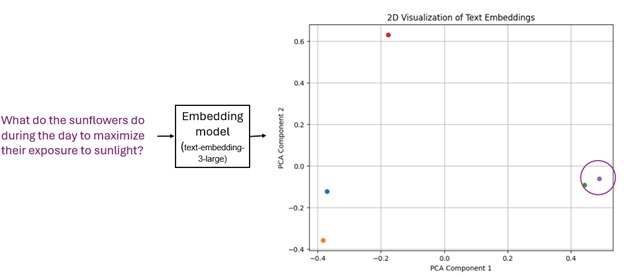

OpenAI text-embedding-3-large:

The following illustrates vector retrieval with the OpenAI text-embedding-3-large model, which has a dimensionality of 3072. In this example, the larger embedding model appears to capture more nuance: the query sits closer to the relevant context than it does with the 1536-dimensional text-embedding-3-small model.

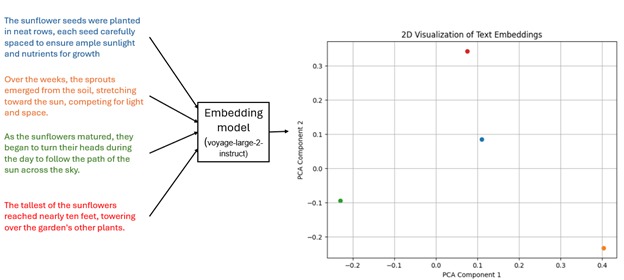

Voyage-large-2-instruct:

A retrieval example with the Voyage AI embedding model ‘voyage-large-2-instruct’, which has a dimensionality of 1024, is illustrated below.

What is RAG?

Retrieval Augmented Generation (RAG) is a technique that combines retrieval of external information with a language model's ability to generate text. In this approach, when a user poses a question, the system retrieves relevant information from a large knowledge base. This retrieved knowledge together with the user query is then provided to an LLM, which uses this context to generate an informed response. The retrieval component is crucial as it addresses the challenge of identifying useful information from a vast dataset, typically using similarity searches based on vector embeddings.
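The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal sketch, not a definitive implementation: `build_rag_prompt` and `rag_answer` are hypothetical names, the prompt wording is an assumption, and `retrieve` and `generate` are placeholders for whatever retriever and LLM client are actually in use.

```python
from typing import Callable, List


def build_rag_prompt(query: str, contexts: List[str]) -> str:
    """Combine retrieved context and the user query into one prompt."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}\nAnswer:"
    )


def rag_answer(
    query: str,
    retrieve: Callable[[str, int], List[str]],
    generate: Callable[[str], str],
    top_k: int = 1,
) -> str:
    """Retrieve context for the query, then ask the LLM to answer with it."""
    contexts = retrieve(query, top_k)
    return generate(build_rag_prompt(query, contexts))


# Stand-ins (assumptions): a fixed retriever and a fake LLM.
def fake_retrieve(query: str, top_k: int) -> List[str]:
    knowledge = ["Sunflowers turn their heads to follow the sun."]
    return knowledge[:top_k]


def fake_generate(prompt: str) -> str:
    return f"[an LLM would answer from a {len(prompt)}-character prompt]"


print(rag_answer("What do sunflowers do during the day?", fake_retrieve, fake_generate))
```

Keeping the retriever and the generator as separate callables mirrors the split in the description: either one can be swapped without touching the other.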

How is retrieval performed?

To start with, the documents to be queried are divided into sections and vectorized. The vector representation of each section is stored in a vector database, with the original section content stored alongside it as metadata. When a user submits a query, it is transformed into an embedding using the same model that created the document embeddings. Nearby embeddings are then retrieved from the vector database using a measure such as Euclidean distance or cosine similarity. The content corresponding to these embeddings is then passed downstream, typically to an LLM as context.
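The steps above can be sketched with a small in-memory store. This is a sketch under stated assumptions: `toy_embed` is a bag-of-words stand-in for a real embedding model (it matches on shared words, not on meaning), and `InMemoryVectorStore` is a hypothetical class, not the API of any vector database.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    def __init__(self, embed):
        self.embed = embed
        self.records = []  # (vector, metadata) pairs

    def add(self, text):
        # Store the vector together with the original text as metadata.
        self.records.append((self.embed(text), {"text": text}))

    def search(self, query, top_k=1):
        # Embed the query with the same function used for the documents.
        q = self.embed(query)
        scored = [(cosine_similarity(q, vec), meta["text"]) for vec, meta in self.records]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


chunks = [
    "The sunflower seeds were planted in neat rows, each seed carefully spaced to ensure ample sunlight and nutrients for growth.",
    "Over the weeks, the sprouts emerged from the soil, stretching toward the sun, competing for light and space.",
    "As the sunflowers matured, they began to turn their heads during the day to follow the path of the sun across the sky.",
    "The tallest of the sunflowers reached nearly ten feet, towering over the garden's other plants.",
]
store = InMemoryVectorStore(toy_embed)
for chunk in chunks:
    store.add(chunk)

query = "What do the sunflowers do during the day to maximize their exposure to sunlight?"
results = store.search(query, top_k=1)
print(results[0][1])
```

Even this crude word-count embedding ranks the third sentence first for the example query, because the two share the most words. A trained embedding model is needed when the query and the relevant text are close in meaning but use different words.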

What are vector embeddings?

Vector embeddings are a way to represent data in a format that machine learning models can understand. If you can associate 𝑛 different attributes with a piece of data, where each attribute can take on a real number value, then the values for those 𝑛 attributes would constitute the 𝑛-dimensional embeddings for that data. Data points that share similar attributes will thus have vector embeddings that are close to each other in the 𝑛-dimensional space. In the case of text embeddings, chunks of text that are closer in meaning or context have vector embeddings that are closer to each other in the 𝑛-dimensional vector space. This proximity is often quantified using distance metrics like Euclidean distance or cosine similarity.
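For reference, the two measures mentioned above can be written out for a pair of embeddings $a, b \in \mathbb{R}^n$. The symbols $a$, $b$, and $d$ are notation introduced here for illustration.

$$
d(a, b) = \lVert a - b \rVert_2 = \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2},
\qquad
\cos(a, b) = \frac{a \cdot b}{\lVert a \rVert_2 \, \lVert b \rVert_2} = \frac{\sum_{i=1}^{n} a_i b_i}{\sqrt{\sum_{i=1}^{n} a_i^2} \, \sqrt{\sum_{i=1}^{n} b_i^2}}
$$

A smaller Euclidean distance means the vectors are closer, whereas a larger cosine similarity means they are closer. When both vectors are normalized to unit length, the two are directly related:

$$
\lVert a - b \rVert_2^2 = \lVert a \rVert_2^2 + \lVert b \rVert_2^2 - 2\, a \cdot b = 2 - 2\cos(a, b)
$$

so ranking neighbors by smallest Euclidean distance or by largest cosine similarity gives the same order in that case.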

Note also that the transformation from a data point to an embedding vector is generally irreversible: given a vector embedding, it is typically not possible to perfectly reconstruct the original data point that was fed into the embedding model. The model compresses its input into a fixed-length vector, and some information is inevitably lost in the process.

What is an embedding model?

An embedding model often uses a transformer architecture. Training such a model focuses on converting data into vector representations so that similar data points are positioned closer together in the vector space. How well the resulting embeddings capture meaningful relationships depends heavily on the quality of the training data and on the design of the model used to learn them.