Customer Data Analysis

Preparing Data

Before we start analyzing your customer data with Queryloop, let's get the data ready. Queryloop works best with data in a specific format, which keeps the analysis running smoothly.

Data Format

CSV Format: Save your customer data in a Comma-Separated Values (CSV) file. This is a widely used format that Queryloop can easily understand.

Setting up Proper Headers: The first row of your CSV should act like a signpost, labeling each column of data. These headers will help Queryloop identify what kind of information each column contains (e.g., "Customer Name," "Product Purchased," "Review Text").

Defining Data Types: While not strictly required, defining a data type for each column can further enhance your analysis. For example, marking a column as "Date" or "Currency" allows Queryloop to perform date-specific or currency-specific calculations and filters.

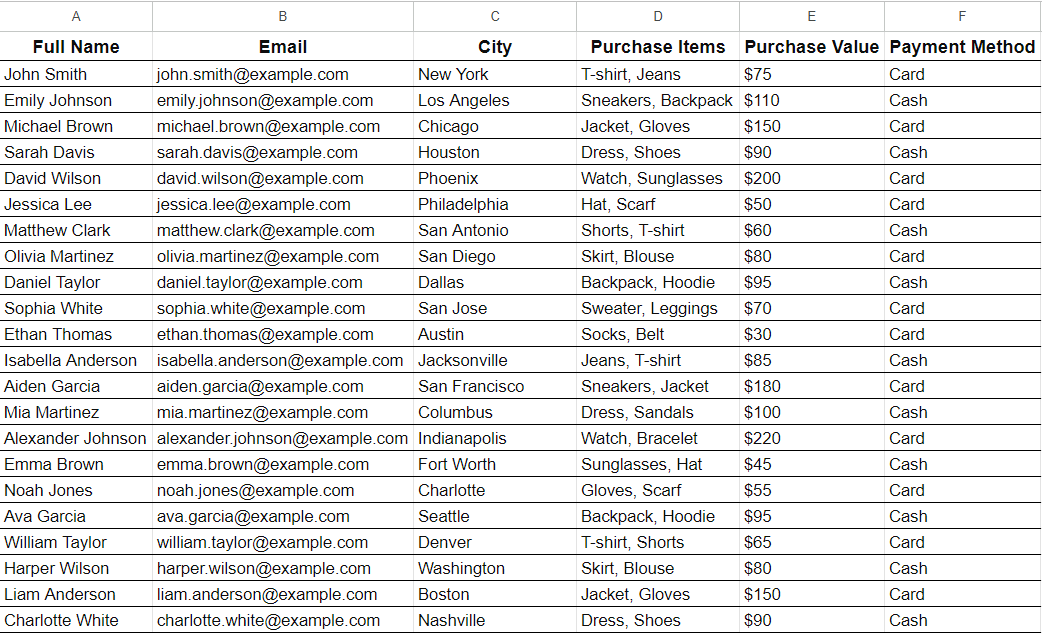

Dataset Preview
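Before uploading, it can help to check the file locally against the points above: a header row, consistent rows, and parseable dates. The following is a minimal sketch using only Python's standard library. The sample data, the function name `check_customer_csv`, and the assumed `YYYY-MM-DD` date format are illustrative assumptions, not part of Queryloop.

```python
import csv
import io
from datetime import datetime

# Hypothetical sample data; the header names reuse the examples from this guide.
SAMPLE_CSV = '''Customer Name,Product Purchased,Purchase Date,Review Text
Ada Lopez,Wireless Mouse,2024-01-15,"Works well, battery lasts long."
Ben Ito,USB-C Hub,2024-02-03,Stopped working after a week.
'''


def check_customer_csv(text, date_columns=()):
    """Return a list of problems found in CSV text; an empty list means none were found."""
    reader = csv.DictReader(io.StringIO(text))
    headers = reader.fieldnames or []
    if not headers:
        return ["no header row found"]

    problems = []
    if any(not header.strip() for header in headers):
        problems.append("a header is blank")
    if len(set(headers)) != len(headers):
        problems.append("a header is duplicated")
    missing = [col for col in date_columns if col not in headers]
    problems.extend(f"date column {col!r} is not in the header row" for col in missing)

    for record_no, row in enumerate(reader, start=1):
        # DictReader files surplus fields under the key None and pads short rows with None.
        if None in row or any(value is None for value in row.values()):
            problems.append(f"record {record_no}: field count does not match the header row")
            continue
        for col in date_columns:
            if col in missing:
                continue
            try:
                datetime.strptime(row[col], "%Y-%m-%d")  # assumed date format
            except ValueError:
                problems.append(f"record {record_no}: {col!r} is not a YYYY-MM-DD date")
    return problems


print(check_customer_csv(SAMPLE_CSV, date_columns=["Purchase Date"]))  # prints []
```

To check a real file, read its contents with `open(path, newline="", encoding="utf-8").read()` and pass the text to the function.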

Defining Problem Statement and Prompt Generation

The next step is defining the problem statement. This helps Queryloop generate a better prompt for its Large Language Models (LLMs).

Think of this problem statement as a roadmap for Queryloop. The clearer you define the specific question you want to answer or the insight you're seeking, the better Queryloop can generate a base prompt for the LLM. This base prompt sets the context for the LLM, guiding it towards understanding your customer data and delivering the most relevant results.

- Note: Ensure your problem statement aligns with the data available in your CSV file. (Don't ask questions that your data can't answer).

Prompt Generation:

Generating a prompt is as simple as clicking a button. Click the "Generate Prompt" button and Queryloop will automatically generate a base prompt that gives the LLM context about the problem you want to solve.

Ideal Responses

Ideal responses serve as a reference point for evaluating the accuracy and quality of the responses generated by the large language models (LLMs) in Queryloop.

Think of ideal responses as the "perfect answers" to your problem statement. They represent the kind of insights or information you'd ideally extract from your customer data. By providing these ideal responses, you create a gold standard for Queryloop to measure the performance of the LLMs in each experiment.

Best Practices for providing Ideal Responses

- Align with your problem statement: Ensure your ideal responses directly address the specific question you posed earlier.

- Be clear and concise: Just like your problem statement, ideal responses should be easy to understand and unambiguous.

- Consider different formats: Depending on your question, ideal responses could be summaries, trends, specific data points, or even visualizations.

- Provide multiple examples: Don't limit yourself to a single ideal response. Offer several variations to capture the range of potential insights you might be looking for.

Selecting Hyperparameters for Retrieval Augmented Generation (RAG)

Here, you'll have the option to fine-tune the analysis by selecting specific hyperparameters. These are like dials on a machine, allowing you to adjust the process for optimal results.

- Chunk Size: This controls the size of the data snippets fed to the LLM at a time. Think of them as bite-sized pieces of information. You can choose from Tiny, Small, Medium, or Large chunks. Smaller chunks can help with highly detailed analysis, while larger chunks work well for broader insights.

- Metric Type: This determines the similarity measure used to identify relevant data chunks in your customer data. Options include Cosine, Dot Product, Euclidean, and Hybrid. Each has its strengths, and Queryloop's defaults are generally effective, but experimenting with different metric types can yield interesting results.

- Retrieval Method: This defines how Queryloop retrieves relevant data chunks based on your problem statement. Basic retrieval is a good starting point. More advanced options like Sentence Window, Multi-Query, and HyDe offer greater control over the retrieval process.

- Re-rank: After initial retrieval, Queryloop refines the results with the "Basic" or "Maximal Marginal Relevance (MMR)" technique so that the most relevant parts are fed to the LLM. More Re-rank methods are coming soon.

- Top K: This sets the number of relevant chunks retrieved for analysis (1, 5, 15, or 20).

- LLM: Choose your language model. Queryloop offers options like "gpt-3.5-turbo" and "gpt-4," with "Fine Tuned LLMs" coming soon for more specialized tasks. More Large Language Models are coming soon.

- Embedding Model: This translates your text data into numerical vectors that can be compared during retrieval. Choose from pre-trained models like "text-embedding-ada" or wait for upcoming "Fine Tuned Models" for tailored analysis. More Embedding Models are coming soon.
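To make the Metric Type, Top K, and Re-rank settings above more concrete, here is a minimal, self-contained sketch of the general techniques: three common similarity measures, top-k selection, and a basic MMR re-rank. It is not Queryloop's implementation. The vectors are made-up toy embeddings, the function names are illustrative, the `lam` trade-off parameter is an assumption, and the Hybrid metric is not covered.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cosine(a, b):
    # Assumes neither vector is all zeros.
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query, chunks, k, metric="cosine"):
    """Return the ids of the k best-matching chunks under the chosen metric."""
    if metric == "euclidean":
        # Euclidean is a distance: smaller means more similar.
        ranked = sorted(chunks, key=lambda cid: euclidean(query, chunks[cid]))
    else:
        score = cosine if metric == "cosine" else dot
        ranked = sorted(chunks, key=lambda cid: score(query, chunks[cid]), reverse=True)
    return ranked[:k]


def mmr(query, chunks, k, lam=0.5):
    """Maximal Marginal Relevance: reward relevance, penalize redundancy with picks so far."""
    selected, candidates = [], list(chunks)
    while candidates and len(selected) < k:
        def mmr_score(cid):
            relevance = cosine(query, chunks[cid])
            redundancy = max((cosine(chunks[cid], chunks[s]) for s in selected), default=0.0)
            return lam * relevance - (1 - lam) * redundancy
        best = max(candidates, key=mmr_score)
        selected.append(best)
        candidates.remove(best)
    return selected


# Toy 2-D "embeddings" (hypothetical values chosen for illustration).
query = [1.0, 0.0]
chunks = {"a": [0.9, 0.1], "b": [0.8, 0.2], "c": [0.2, 0.9], "d": [4.0, 3.0]}

print(top_k(query, chunks, 2, "cosine"))     # ['a', 'b']
print(top_k(query, chunks, 2, "dot"))        # ['d', 'a']  (dot product favors long vectors)
print(top_k(query, chunks, 2, "euclidean"))  # ['a', 'b']
print(mmr(query, chunks, 2, lam=0.3))        # ['a', 'c']  ('b' is too similar to 'a')
```

The same chunks rank differently depending on the metric, which is why trying more than one Metric Type can change results. With a low `lam`, MMR trades some relevance for diversity among the selected chunks.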

Creating Combinations

- Click the Create Button: This generates all possible combinations of your chosen hyperparameter settings. Think of it as creating a grid of options to explore.

- Initializing Environment: This step saves all the data in the Vector Database and creates the necessary indexes.

- Run All: Pressing this button executes each combination and compares the LLM-generated responses with the Ideal Responses you provided. The results are shown in the Evaluation tab.
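The "grid of options" created in the first step above is a Cartesian product of the selected values. A minimal sketch of that idea follows; the option values come from this guide, while the dictionary keys and the function name `build_combinations` are illustrative assumptions rather than Queryloop identifiers.

```python
from itertools import product

# Values selected for each hyperparameter (keys are illustrative names).
selected = {
    "chunk_size": ["Small", "Medium"],
    "metric_type": ["Cosine", "Hybrid"],
    "retrieval_method": ["Basic"],
    "rerank": ["Basic", "MMR"],
    "top_k": [5, 15],
    "llm": ["gpt-4"],
    "embedding_model": ["text-embedding-ada"],
}


def build_combinations(options):
    """Return one dict per combination of the selected option values."""
    keys = list(options)
    return [dict(zip(keys, values)) for values in product(*(options[k] for k in keys))]


combinations = build_combinations(selected)
print(len(combinations))  # 2 * 2 * 1 * 2 * 2 * 1 * 1 = 16
print(combinations[0])
```

Because the count is the product of the number of values chosen per hyperparameter, each extra value multiplies the number of experiments that Run All has to execute.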

Evaluation

Let's explore how Queryloop measures the success of each experiment in the Evaluation tab. Here, you will learn the key metrics that determine the effectiveness of your customer data analysis:

- Overall Accuracy (out of 10): This score combines the performance of all individual metrics, providing a quick overview of how well each experiment aligns with your ideal responses.

- Feedback: This qualitative assessment offers insights into how closely the LLM-generated response resembles the ideal response in terms of style, tone, and overall completeness.

- Retrieval Score (0, 1, 2): This metric evaluates whether the data chunks retrieved from your customer data contained enough information to answer your question effectively. Scores indicate:
  - 0: No relevant information found in the retrieved data.
  - 1 (Partial): Some relevant information was present, but not everything needed for a complete answer.
  - 2 (Complete): All the necessary information was successfully retrieved for analysis by the LLM.

- Correctness (0, 1, 2): This metric judges how well the LLM's response aligns with the information provided in your ideal response. Scores indicate:
  - 0: The response lacks relevant information or contains minimal details from the ideal response.
  - 1: The response includes some, but not all, of the key points from the ideal response.
  - 2: The response perfectly matches the information and insights presented in the ideal response.

- Prompt Adherence (0-1): This metric assesses how faithfully the LLM followed the instructions you specified during experiment creation. This includes adhering to the desired tone, returning relevant sources, and prioritizing recent documents in the analysis.

- Response Length (0, 1): This metric evaluates whether the LLM's response adheres to your length preferences. Scores indicate:
  - 0: The response significantly deviates from your desired length (too short or too long).
  - 1: The response aligns well with your preference for brief, concise, or detailed responses.

- Faithfulness (0, 1): This metric determines how closely the generated response relies on the provided context. Scores indicate:
  - 0: The LLM might be creating responses independently, without referencing the retrieved data chunks.
  - 1: The response is well-grounded in the information provided, ensuring a more reliable and insightful analysis.
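This guide does not state how Overall Accuracy is derived from the individual metrics. Purely as an illustration of how such a roll-up could work, the sketch below sums the five numeric metrics and rescales the total to a 10-point scale. The equal weighting, the rounding, and all names are assumptions; Queryloop's actual formula may differ.

```python
# Maximum value of each numeric metric, taken from the ranges listed above.
MAX_SCORES = {
    "retrieval": 2,
    "correctness": 2,
    "prompt_adherence": 1,
    "response_length": 1,
    "faithfulness": 1,
}


def overall_accuracy(scores):
    """Hypothetical roll-up: share of the maximum total, rescaled to 0-10."""
    for name, maximum in MAX_SCORES.items():
        if not 0 <= scores[name] <= maximum:
            raise ValueError(f"{name} must be between 0 and {maximum}")
    total = sum(scores[name] for name in MAX_SCORES)
    return round(10 * total / sum(MAX_SCORES.values()), 1)


experiment = {
    "retrieval": 2,
    "correctness": 1,
    "prompt_adherence": 1,
    "response_length": 1,
    "faithfulness": 1,
}
print(overall_accuracy(experiment))  # 8.6  (6 of 7 possible points)
```

Whatever the real formula is, comparing the individual metrics side by side shows why a combination scored low, for example weak retrieval versus a response that ignored the retrieved context.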

Experiments - Chat Playground

Evaluation doesn't end here. Queryloop provides a Chat Playground where you can refine and test your analysis with even more freedom.

In the Evaluation tab, click "Run Other Questions" to open the Chat Playground.

Think of it as a sandbox environment for your experiments. Here you can test different questions: go beyond your initial ideal responses and explore other relevant questions you might have about your customer data using the same experiment configuration. This allows you to evaluate a combination's versatility and its ability to handle diverse queries.

Prompt Optimization

After evaluating your experiments and identifying the most promising combination, it's time to explore Prompt Optimization. This powerful feature in Queryloop empowers you to further refine the base prompt used by the LLM, ultimately leading to even more insightful results from your customer data analysis.

- Accessing Prompt Optimization: Simply navigate to the dedicated "Prompt Optimization" tab within Queryloop.

Selecting the Best Combination

Click on the experiment configuration that yielded the most accurate and insightful results based on your evaluation (refer back to the Evaluation section for details on metrics).

Exploring the Prompts Section

- Once you've selected the best combination, click "Next" to proceed to the "Prompts" section.

- Here, Queryloop will automatically generate multiple variations of the base prompt using various techniques like mutations and "chains of thought." These variations aim to improve the LLM's understanding and response generation capabilities.

- You'll see a list of 10 different prompts derived from the original base prompt.

Selecting the Most Relevant Prompts

- You have the flexibility to:
  - Select All Prompts: If unsure which prompts might be most effective, you can choose all 10 for further evaluation.
  - Select Specific Prompts: Carefully review each prompt and select only the ones that seem most relevant and well-suited to your specific problem statement and desired analysis.

- Clicking "Next" after selecting prompts takes you to a section similar to Retrieval Optimization, but now incorporating the chosen prompts into each experiment configuration.

- This allows you to see how the different prompt variations interact with the various retrieval settings within your chosen experiment combination.

Running and Evaluating

- Once you've selected your prompts (all or specific ones), click "Run" to initiate the analysis using the refined prompts and chosen experiment configuration.

- After the analysis is complete, navigate to the Evaluation section (as described previously) to assess the performance of each prompt variation.

- Don't forget to leverage the Chat Playground again! Just like in Retrieval Optimization, you can use the Chat Playground to test different questions with the newly optimized prompts and gain a deeper understanding of their effectiveness.

By following these steps and iteratively optimizing your prompts, you can significantly enhance the quality and accuracy of the insights derived from your customer data analysis in Queryloop. Remember, the more you refine your prompts, the more precise and powerful your LLM-generated responses will become.

Deploying Your Optimized Customer Data Analysis LLM Application

Congratulations! You've successfully navigated the evaluation and optimization process, and now it's time to deploy your Customer Data Analysis LLM Application.

Head over to the "Deployment" tab within Queryloop. Here, you'll only see experiment configurations that have undergone Prompt Optimization and are deployable for real-world use.

- Deployment Options: Click on the "Deploy" button next to the desired combination to initiate the deployment process. Now your optimized analysis is ready to be accessed!

- Testing with Chat Playground (Optional): You can use the "Run" button associated with each deployed combination to open the familiar Chat Playground. There you can test your chosen configuration with additional questions and solidify your confidence in its performance.

Deployment Options Beyond Queryloop:

- Queryloop offers various deployment options to suit your needs:
  - Queryloop Platform: Deploy your analysis directly within Queryloop, providing a user-friendly interface for accessing insights.
  - External Applications: Click on the "Generate API" button associated with a deployed combination. This generates an API key that allows you to integrate your analysis with your own custom applications, extending its reach beyond Queryloop.

- Stay tuned for more deployment options. Queryloop is actively developing a platform integration tool that will connect your deployed analysis with popular communication platforms like Slack, Discord, WhatsApp, Telegram, and more.
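For the External Applications route, a custom application typically sends an HTTP request that carries the generated API key. This guide does not document the endpoint, the request body, or the authentication header, so everything in the sketch below is a placeholder: the URL, the `Bearer` header scheme, the `question` field, and the function name `build_question_request` are assumptions to be replaced with the details Queryloop provides alongside your API key. The sketch only builds the request and does not send it.

```python
import json
import urllib.request


def build_question_request(endpoint_url, api_key, question):
    """Build (but do not send) a JSON POST request carrying the API key."""
    payload = json.dumps({"question": question}).encode("utf-8")  # assumed body shape
    return urllib.request.Request(
        endpoint_url,
        data=payload,
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; substitute the real endpoint and key for your deployment.
request = build_question_request(
    "https://example.invalid/analysis",
    "YOUR_API_KEY",
    "Which product receives the most negative reviews?",
)
print(request.get_method(), request.full_url)
```

Once the real endpoint and payload format are known, the request can be sent with `urllib.request.urlopen(request)`. Keep the API key out of source control, for example by reading it from an environment variable.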