Optimizing Information Retrieval

The Retrieval Optimization tab helps you fine-tune how your application searches and retrieves information from your documents. By testing different configurations, you can identify the settings that work best for your use case and improve the accuracy and relevance of your application's responses.

Understanding Retrieval Optimization

Effective information retrieval is vital for applications that need to access and utilize specific information from large document collections. The Retrieval Optimization process tests combinations of parameters such as:

- Chunk Size: How documents are divided for processing

- Metric Type: How similarity is calculated

- Retrieval Method: Technique used to find relevant information

- Reranker: Method for refining search results

- Top K: Number of results to retrieve

- Embedding Model: The model that creates vector representations of text

By systematically testing these parameters, you can identify the configuration that delivers the most accurate and relevant information for your specific use case.
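To get a feel for how quickly the number of combinations grows, the sketch below enumerates a small parameter grid. The parameter names mirror the list above, but every value shown (chunk sizes, metric names, model names) is a hypothetical placeholder, not a list of options the product actually offers.

```python
from itertools import product

# Hypothetical values for illustration only; the real options come from the UI.
PARAMETER_GRID = {
    "chunk_size": [256, 512],
    "metric_type": ["cosine", "dot_product"],
    "retrieval_method": ["dense", "hybrid"],
    "reranker": [None, "cross_encoder"],
    "top_k": [3, 5],
    "embedding_model": ["model_a", "model_b"],
}


def build_combinations(grid):
    """Return one dict per combination of parameter values (Cartesian product)."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]


all_combinations = build_combinations(PARAMETER_GRID)
print(len(all_combinations))  # two values for each of six parameters: 2**6 = 64
```

Even two values per parameter yield 64 combinations, which is why testing a diverse subset first is usually more practical than running everything.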

Preparing for Optimization

Before running experiments, you may need to adjust your application settings and prepare additional test data:

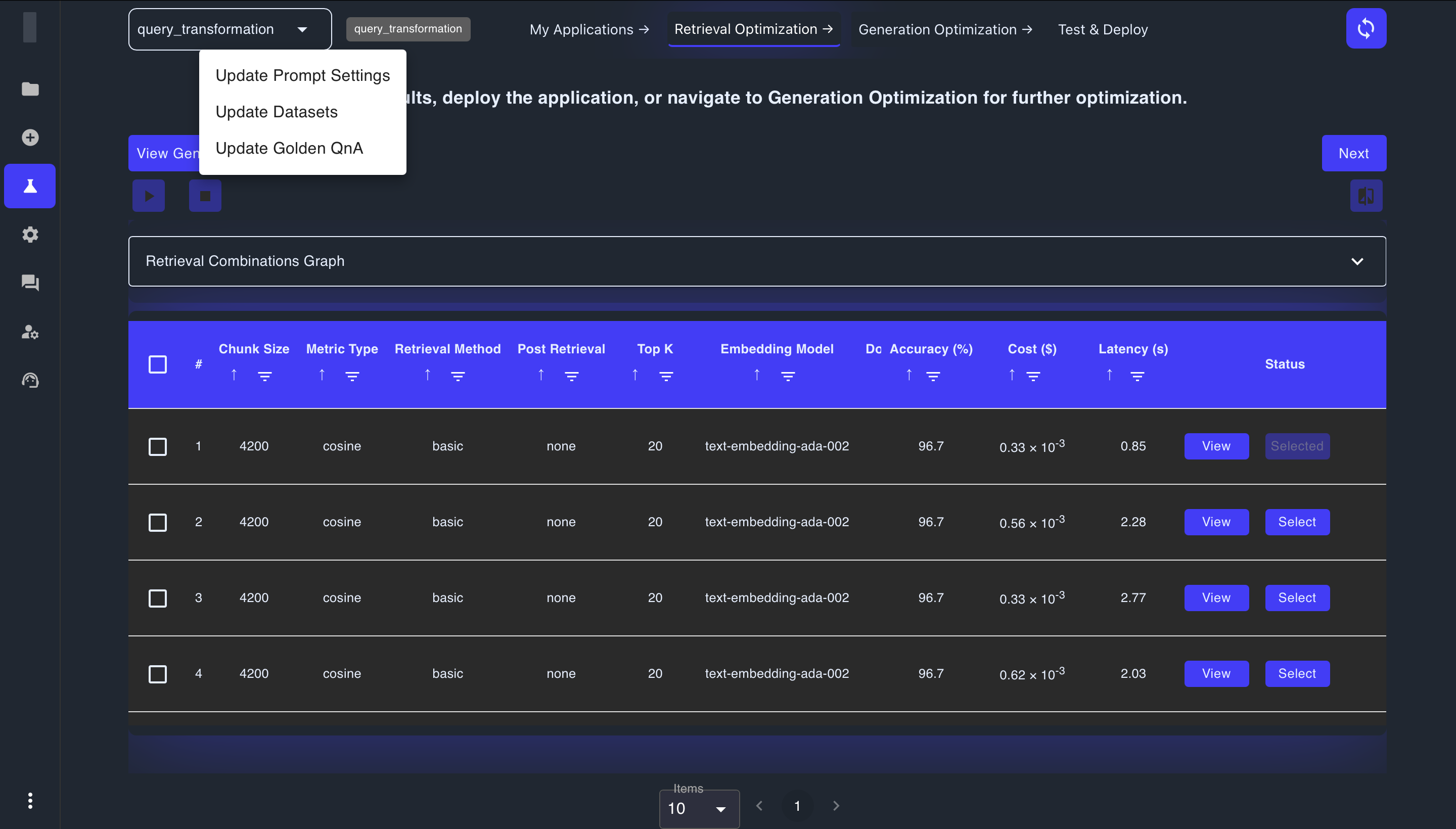

Updating Your Application

The dropdown menu at the top of the application provides options to update specific components:

- Update Datasets: Add or modify the document collection your application uses

- Update Golden QnA: Add or edit benchmark question-answer pairs used for testing

These options help ensure your application has the right data and evaluation criteria before optimization begins.

Adding Golden QnA Pairs

Golden Question and Answer pairs serve as benchmarks for testing retrieval performance:

- Click Add Golden QnA to create new benchmark test cases

- Enter a question that your application should be able to answer

- Provide the expected answer based on your document collection

- Save the QnA pair to include it in your testing set

Golden QnAs are essential for objective performance evaluation, allowing you to measure how well each configuration retrieves the correct information.

Adding Additional Data

You can enhance your document collection by:

- Clicking Add Data to upload new documents

- Adding documents that contain information relevant to your test cases

- Ensuring your collection is comprehensive enough to cover your application's domain

A diverse and representative document collection improves the quality of your optimization process.

Running Retrieval Experiments

The experimental process follows a structured workflow, allowing you to compare different parameter combinations and their performance:

Step 1: Initialize Experiments

Begin by preparing your experimental environment:

- Click the Initialize Combinations button (Play Button)

- The system will prepare your data by:

  - Processing documents

  - Creating vector embeddings

  - Setting up the retrieval infrastructure

  - Preparing parameter combinations for testing

During initialization, you'll see one of these status indicators:

- Queued: Waiting for processing resources

- In Progress: Actively preparing your environment

- Completed: Ready to run experiments

- Failed: Encountered an issue during setup (check your data and settings)

Step 2: Select and Run Combinations

Once initialization is complete:

- Review the available parameter combinations in the table

- Check the boxes next to combinations you want to test

- Start with diverse combinations to explore the parameter space

- Include different embedding models for comprehensive testing

- Click Run Selected Combinations to begin the evaluation

Each combination will be tested against your Golden QnA pairs, with results showing how effectively the configuration retrieves relevant information.

Status indicators for each combination include:

- Queued: Waiting to be processed

- Running: Currently being evaluated

- Completed: Evaluation finished, results available

- Failed: Error during evaluation

Pro Tip: You can abort running experiments by clicking the Abort button if you notice issues or want to make adjustments.

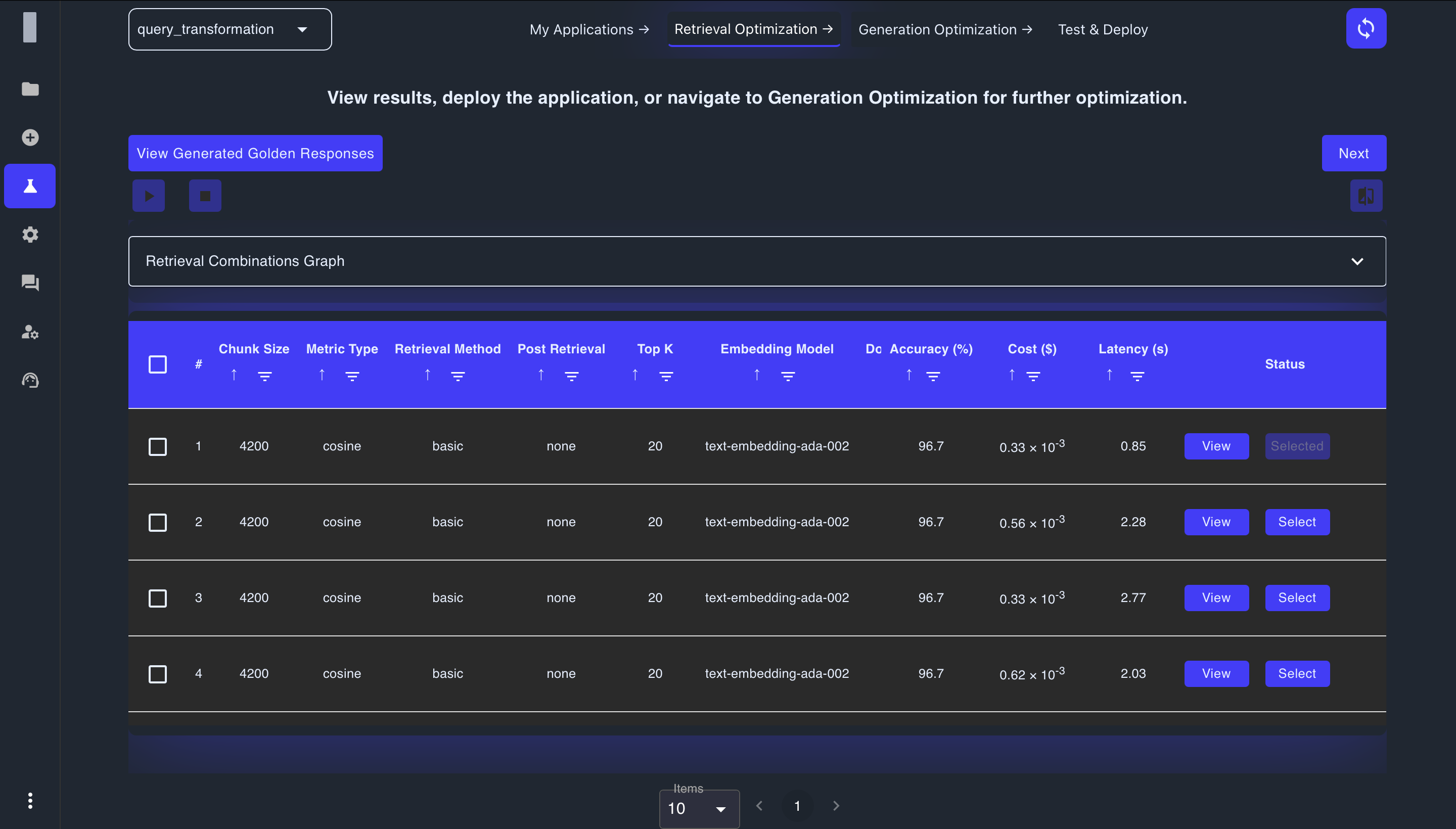

Analyzing Retrieval Results

After your experiments complete, analyze the results to identify the best-performing configurations:

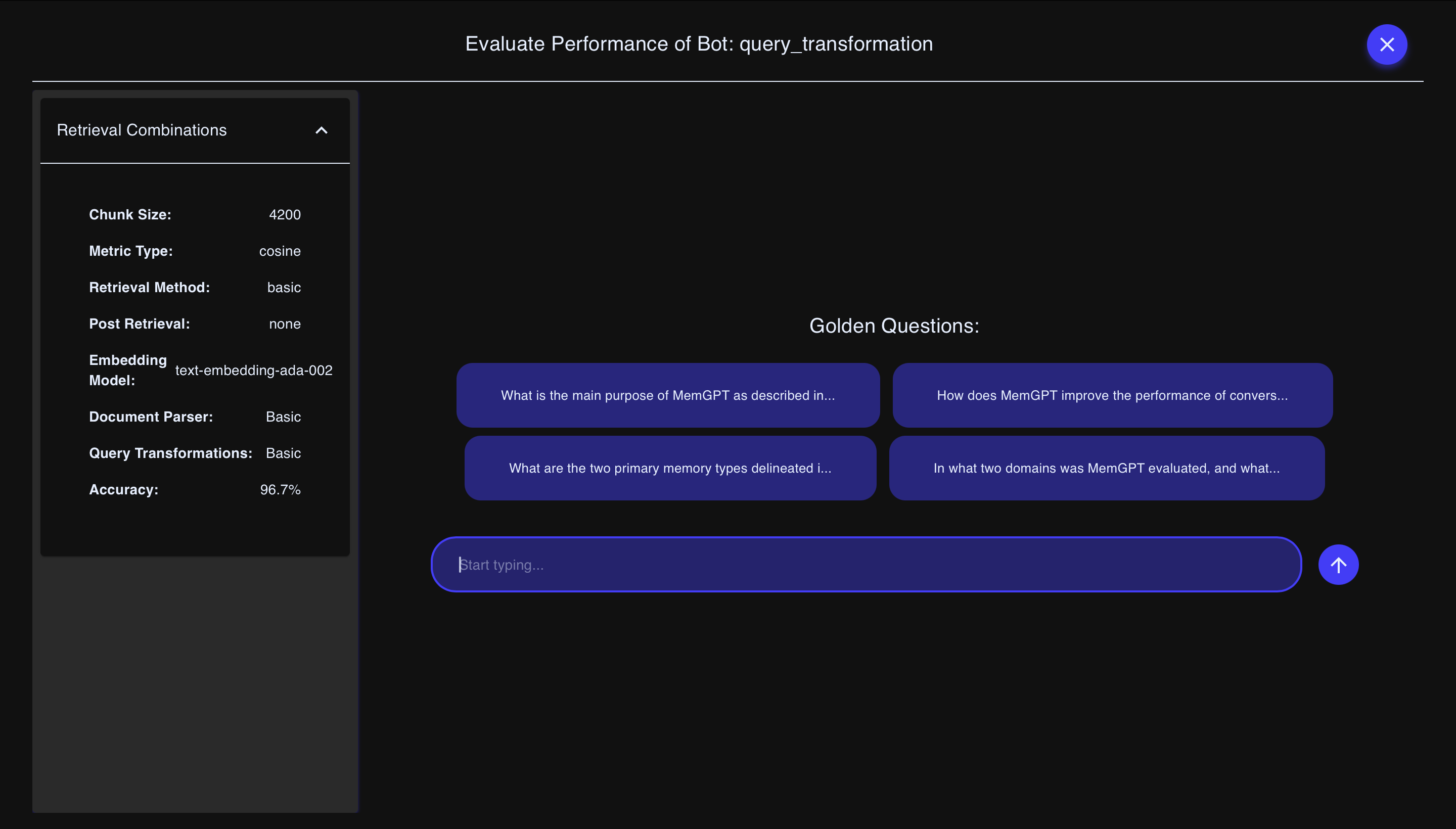

Performance Metrics

The combinations table shows several key metrics to help you evaluate performance:

- Accuracy (%): Percentage score indicating how accurately the configuration retrieved relevant information

- Cost ($): The computational cost in dollars, shown in scientific notation (e.g., 0.33 × 10⁻³, which is $0.00033)

- Latency (s): Response time in seconds, indicating how quickly results are returned

- Status: Current state of the combination (Selected, Ready to select)
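If you copy cost values out of the table for your own comparisons, it can help to convert the scientific-notation display into a plain number. The following is a minimal sketch that assumes the value appears as text in the form `mantissa × 10<superscript exponent>`, as in the example above; `parse_cost` is a hypothetical helper, not part of the product.

```python
# Map Unicode superscript digits and signs to their plain ASCII forms.
SUPERSCRIPT_TO_ASCII = str.maketrans("⁰¹²³⁴⁵⁶⁷⁸⁹⁻⁺", "0123456789-+")


def parse_cost(display: str) -> float:
    """Convert a string such as '0.33 × 10⁻³' into a float (dollars)."""
    mantissa, separator, power = display.partition("×")
    power = power.strip()
    if not separator or not power.startswith("10"):
        raise ValueError(f"unexpected cost format: {display!r}")
    # A bare '10' with no superscript means 10 to the power of 1.
    exponent = power[2:].translate(SUPERSCRIPT_TO_ASCII) or "1"
    return float(f"{mantissa.strip()}e{exponent}")


print(f"{parse_cost('0.33 × 10⁻³'):.5f}")  # 0.00033
```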

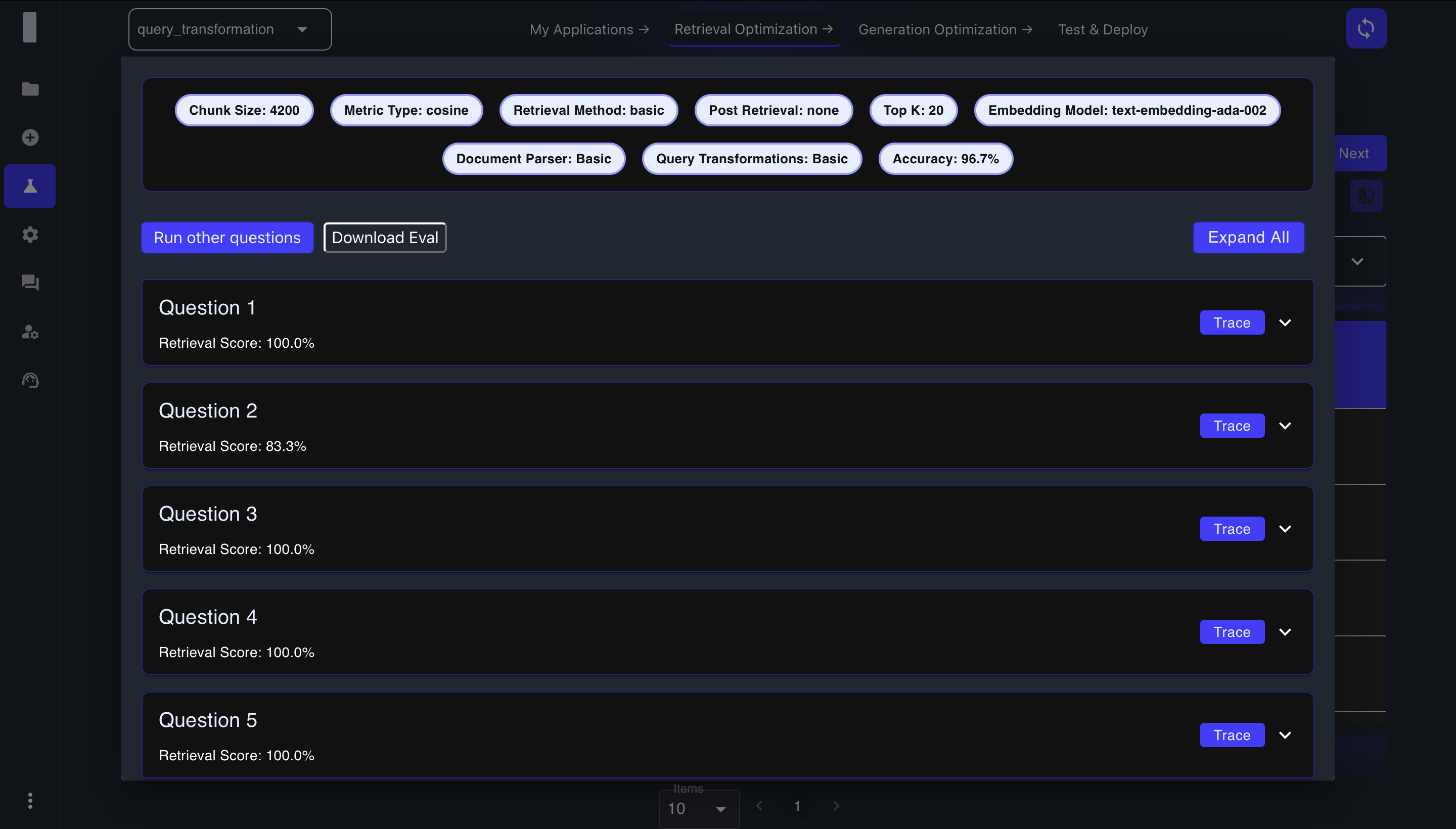

In the detailed view, you'll see additional metrics for each test question:

- Retrieval Score: Percentage indicating how completely the required information was retrieved

- Feedback: Qualitative assessment of retrieval quality
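The guide does not specify how the Retrieval Score is computed. As a rough intuition for "how completely the required information was retrieved", the sketch below uses a simple stand-in: the share of distinct words in the expected answer that also appear in the retrieved text. This token-recall measure and the `retrieval_score` function are assumptions for illustration; the product's actual scoring may work quite differently.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase the text and return its distinct alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieval_score(expected_answer: str, retrieved_chunks: list[str]) -> float:
    """Percentage of expected-answer words found anywhere in the retrieved chunks."""
    expected = tokens(expected_answer)
    if not expected:
        return 0.0
    retrieved = set()
    for chunk in retrieved_chunks:
        retrieved |= tokens(chunk)
    return 100.0 * len(expected & retrieved) / len(expected)


# Hypothetical Golden QnA answer and retrieved chunks.
answer = "Refunds are issued within 30 days"
print(retrieval_score(answer, ["Refunds are issued within 30 days of purchase."]))  # 100.0
print(round(retrieval_score(answer, ["Shipping takes 5 days."]), 1))  # 16.7
```

A word-overlap measure like this ignores paraphrases and word order, so treat it only as a way to reason about the metric, not as a replacement for the scores shown in the detailed view.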

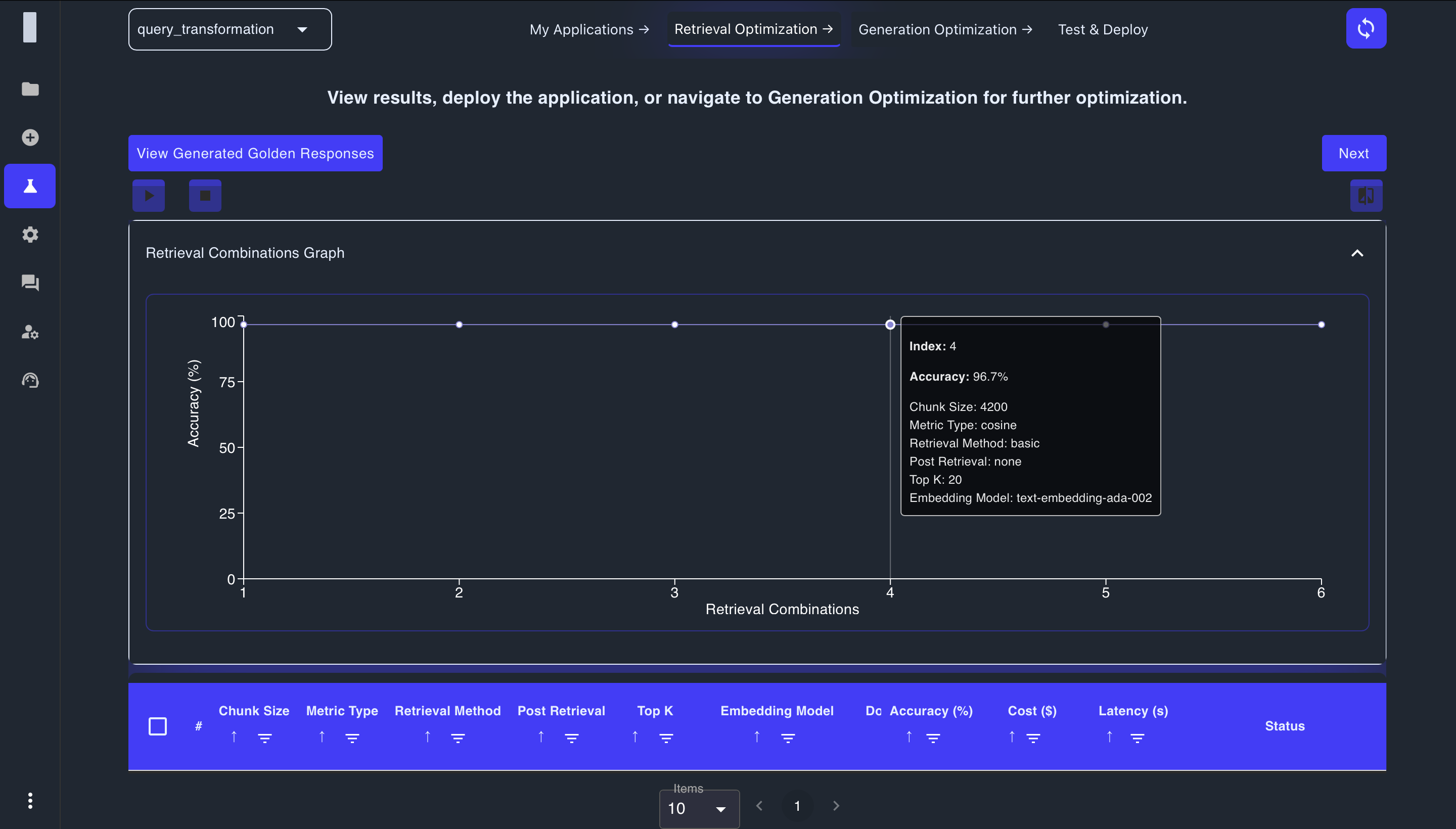

Visualization and Comparison

To gain deeper insights into your results:

-

Expand the Performance Graph to visualize metrics across configurations

-

Sort results by different metrics to identify top performers

-

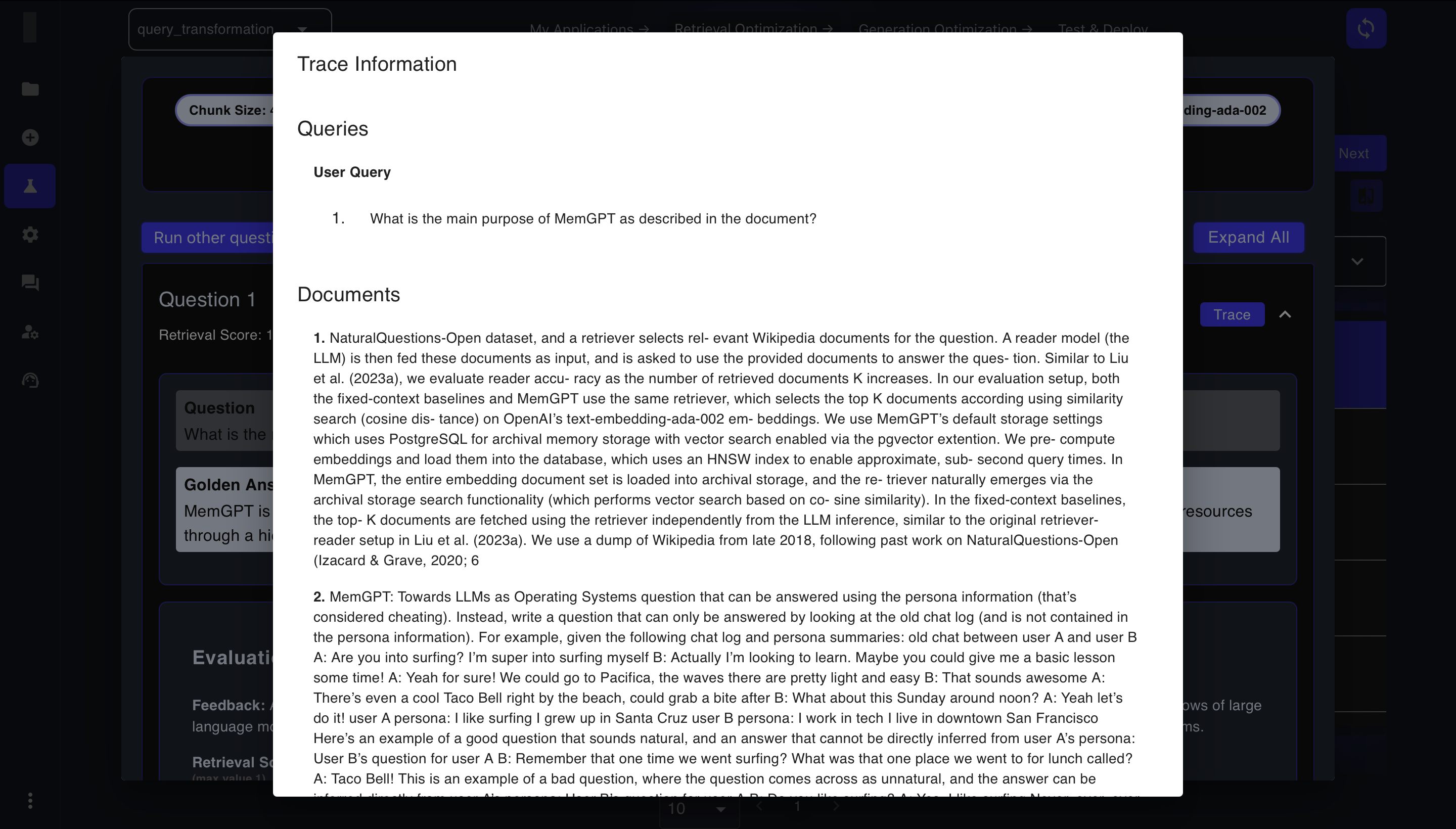

Click "View" on individual combinations to examine detailed results for each test question

-

Click "Trace" to see exactly which documents were retrieved and how they were processed

-

Download evaluation results for offline analysis or documentation

Testing Additional Questions

Beyond your Golden QnA pairs, you can:

- Click Run Other Questions to test a configuration with custom queries

- Enter new questions to see how the configuration performs

- Evaluate responses to ensure the configuration works well across diverse queries

This additional testing helps verify that your chosen configuration generalizes well to different types of questions.

Selecting the Optimal Configuration

After thorough analysis:

- Identify the configuration that best balances:

  - Accuracy: How precisely it retrieves relevant information

  - Latency: How quickly it responds

  - Cost: Computational resources required

- Click the Select button for your chosen configuration

- The system will use this configuration as the basis for the Generation Optimization phase (if applicable) or final deployment

Note: You can change your selection at any time before proceeding to the next phase.
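One way to make the accuracy, latency, and cost trade-off explicit is to combine the three metrics into a single weighted score. The sketch below is one possible approach, not the product's selection logic: it rescales each metric to the range 0 to 1 across the tested configurations, then applies weights. The weights, the `Result` type, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Result:
    name: str
    accuracy: float  # percent, higher is better
    latency: float   # seconds, lower is better
    cost: float      # dollars, lower is better


def normalize(values, higher_is_better):
    """Min-max scale values to [0, 1] so that 1.0 is always the best score."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)  # all configurations tie on this metric
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def rank(results, w_accuracy=0.6, w_latency=0.2, w_cost=0.2):
    """Return (score, result) pairs sorted from best to worst."""
    acc = normalize([r.accuracy for r in results], higher_is_better=True)
    lat = normalize([r.latency for r in results], higher_is_better=False)
    cost = normalize([r.cost for r in results], higher_is_better=False)
    scored = [
        (w_accuracy * a + w_latency * l + w_cost * c, r)
        for a, l, c, r in zip(acc, lat, cost, results)
    ]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


# Made-up results for three configurations.
results = [
    Result("A", accuracy=90.0, latency=2.0, cost=0.0005),
    Result("B", accuracy=85.0, latency=1.0, cost=0.0003),
    Result("C", accuracy=70.0, latency=0.5, cost=0.0001),
]
for score, result in rank(results):
    print(f"{result.name}: {score:.2f}")
```

With these sample numbers, configuration B ranks first even though A has the highest accuracy, because B gives up little accuracy for noticeably lower latency and cost. Adjust the weights to reflect what matters most for your application.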

Troubleshooting Retrieval Optimization

If you encounter issues during the optimization process:

- Initialization failures: Check your data format and quality

- Poor performance: Verify that your Golden QnAs are answerable from your documents

- Long processing times: Consider testing fewer combinations or smaller document sets first

- Inconsistent results: Ensure your document collection is comprehensive and up to date

Next Steps

After selecting your optimal retrieval configuration:

- If your application includes a Generation module, you'll proceed to Generation Optimization

- If your application is retrieval-only, you'll move to the Test and Deploy phase

Systematically optimizing your retrieval parameters improves your application's ability to find and use relevant information, which gives it a strong foundation for accurate and contextually appropriate responses.